A classifieds website starts small and grows over time. It becomes popular as the number of ads, users and page views increases. In our experience, we all start small with shared or dedicated web hosting. When the website grows and becomes slower, the server needs to be optimized for a classifieds website. This is normal practice: we want to try an idea without spending too much money on a server before we know how many server resources it will use in the future.

Generally, classifieds websites with more than 10k ads need to be optimized. In this article, we will show several ways to optimize your classifieds website.

You will learn:

- Why does your server use more resources as it grows?

- On-site optimization: website structure, image sizes.

- Server-side optimization: caching, rate limiting, DDoS and bad bot protection.

- Finding hidden bad bots and blocking them.

- Optimization and protection by adding a reverse proxy.

- Case study: How we doubled server performance and serve 8 million requests each month.

Depending on how much control you have over your server, you can use all or some of these optimization tips to speed up and protect your classifieds website.

Why does the web server slow down?

Understand how a web server works

What is a web server? A web server is a computer that stores and serves your website's content. There are 2 types of content.

Static content is a regular file that is served as is, without any processing. Images, stylesheets and JavaScript files are examples of static content.

Dynamic content is generated on each request. Web page content such as ads, categories and users is generated by a programming language such as PHP from data stored in the database. Data can be written to and read from the database. For example, when a classified ad is created, its data is stored in the database. When the ad page is requested, the data is retrieved from the database, formatted into an HTML page and displayed to the user. This process of generating page content is dynamic and runs every time someone views any page on your website.

Every web page consists of one piece of dynamic content and 50-200 pieces of static content. For example, a category page on a classifieds website that lists 100 ads uses 100 images plus several CSS and JavaScript files. These are static assets. The page itself is one HTML document, and it is dynamic content.

Serving static content does not use much CPU power. Generating dynamic content uses a lot of CPU power and memory to build each web page.

To optimize web server performance, we need to address issues related to dynamic content creation. There are several ways to reduce dynamic content creation, such as caching and rate limiting. We describe each of them below.

Before starting server optimization, let's dig deeper to find the real cause of server slowdowns.

An increase in site visitors slows down the web server

When you start a classifieds website, it has very few ads, generally less than 100. With marketing and as new ads keep being added, your website starts to grow. When the number of ads reaches 10k, the number of visitors and page views grows accordingly. The more ads you have, the more search traffic you get and the more users start to post ads, which in turn brings even more visitors.

If this has happened to your classifieds website, then you are lucky: you built a successful website that is useful to the community and grows organically over time.

More ads and more page views mean more dynamic content your server needs to generate to serve your visitors. More visitors mean the server needs to generate many pages at the same time, for example 100 pages in 1 second. This is a huge load for a web server.

Solution: to reduce server load, you can reduce the number of ads, for example by cutting ad lifetime in half, making ad posting paid, or removing expired ads from your database. To reduce dynamic page generation, you can add server-side page caching. These options are explained below.

Web crawlers, bad bots, server benchmarks, deliberate attacks

Apart from regular users, your website will be crawled by many spiders/bots. The more content you have, the more pages spiders need to crawl regularly. For example, if you have 10k ads, your website may have up to 500k pages indexed in search engines. Those 500k pages need to be crawled regularly to show the latest content in search results. If 5-10 popular search engines crawl all your web pages every month, you will get roughly 5 million page requests each month. These are not actual users but search engine crawlers.
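To turn the monthly crawl estimate into a load figure, here is a small Python sketch. It assumes, as a simplification, that each search engine recrawls every indexed page exactly once per 30-day month. Real crawlers revisit popular pages more often and arrive in bursts, so the average understates peak load.

```python
# Assumptions: a 30-day month and one full recrawl per engine per month.
SECONDS_PER_MONTH = 30 * 24 * 3600


def monthly_crawl_requests(indexed_pages: int, search_engines: int) -> int:
    """Requests per month if every engine recrawls every indexed page once."""
    return indexed_pages * search_engines


def average_requests_per_second(monthly_requests: int) -> float:
    """Spread the monthly total evenly over the month (ignores peaks)."""
    return monthly_requests / SECONDS_PER_MONTH


total = monthly_crawl_requests(500_000, 10)
print(total)                                          # 5000000
print(round(average_requests_per_second(total), 2))  # 1.93
```

About 2 requests per second may look harmless, but each one is a dynamically generated page. Crawl traffic also comes on top of real visitors rather than spread evenly.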

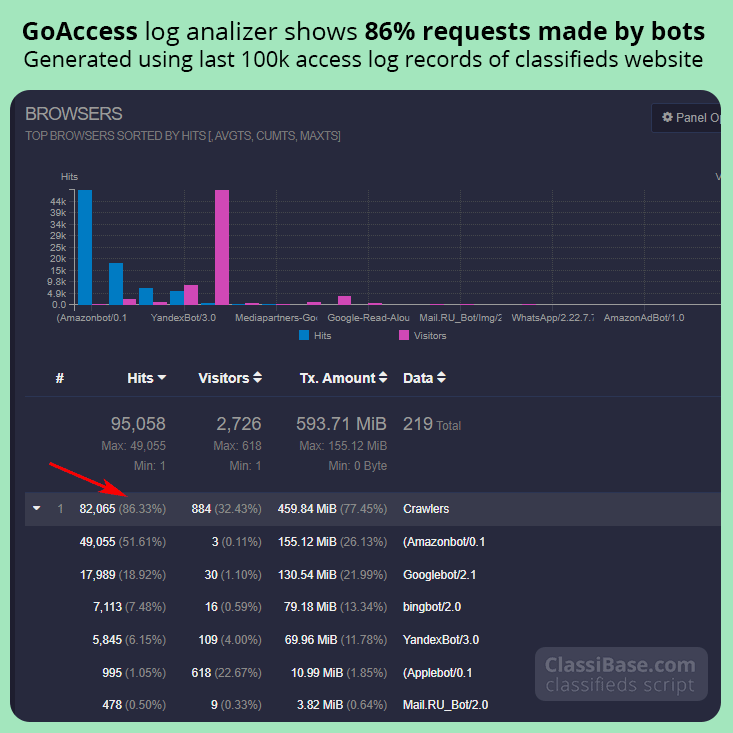

Checking our website logs, we have seen that up to 80-90% of requests are made by web crawlers. That means bots can make up to 9 times more requests than your regular classifieds website visitors.

GoAccess report showing that 86% of requests are made by bots. Detect and block unwanted bots to optimize your server.

Check this report generated with the GoAccess log analyzer. It shows that 86% of requests are made by bots (crawlers, spiders). The report was generated from the last 100k access log records of a live classifieds website. This website gets 300k requests daily: 42k from users and the other 258k from bots (bots use 6x more resources). To preserve server resources for users, we constantly need to monitor and block bad or useless bots.
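You can roughly estimate the bot share in your own logs by classifying user agents with a keyword heuristic. The sketch below is an assumption-based approximation, not how GoAccess classifies crawlers. It treats any user agent containing "bot", "crawl", "spider" or "slurp" as a bot, so bots that imitate browsers are counted as users.

```python
import re

# Hypothetical keyword heuristic: many self-identifying crawlers include one
# of these words in their user agent. Bots posing as browsers are missed.
BOT_PATTERN = re.compile(r"bot|crawl|spider|slurp", re.IGNORECASE)


def is_bot(user_agent: str) -> bool:
    return BOT_PATTERN.search(user_agent) is not None


def bot_share(user_agents: list[str]) -> float:
    """Fraction of requests whose user agent looks like a bot."""
    if not user_agents:
        return 0.0
    return sum(is_bot(ua) for ua in user_agents) / len(user_agents)


sample = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)",
    "Mozilla/5.0 (compatible; YandexBot/3.0; +http://yandex.com/bots)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36",
]
print(bot_share(sample))  # 0.75
```

For the report above, 258k bot requests out of 300k gives the same 86% share. The real figure is likely higher, because hidden bots are not counted.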

Web spiders can be good or bad. Good spiders are crawlers of popular search engines like Google, Yandex, MSN, etc. They index your content to show it in search results and send visitors to your website. To serve more real users, we can limit the request rate for search crawlers.

Bad spiders crawl your website to steal data, learn the website structure, search for script vulnerabilities or deliberately slow down your website. They usually do not obey the crawl rules defined in your robots.txt.

Solution: to reduce server load, block known bad bots and limit requests from some search engine bots.

On-site performance optimization for a classifieds website

Classibase is optimized and tuned for performance. You can view data-driven comparisons with WordPress websites. Still, the site owner can improve performance further by making smart decisions when using Classibase features.

When your classifieds website has lots of ads, you need on-site optimizations to reduce server resource usage. Remember that each page is dynamically generated, so reducing server-side calculations lets pages be generated and shown to users faster. As a result, the server can serve more pages without stressing its resources.

Reduce number of widgets

Widgets are used to add extra content to a page. By reducing the number of dynamic widgets that list ads, users, categories or locations, you can reduce page generation time.

Say, for example, the main content of a category page lists the latest ads in that category, and a widget on the same page lists the top ads in that category. Your category page will then use twice the resources to generate. If you also add popular users and all locations widgets to the same page, that page will use 4x the resources. To reduce server load and speed up page generation, use fewer widgets where possible.

On category pages, you only need the related subcategories widget and maybe a locations widget. On ad pages, you only need the related ads widget.

Optimize number of ads on category pages

Category pages list the latest ads in a given category. If you have many ads, you will see pagination at the bottom of the list. To view all ads in that category, users and web crawlers need to go through all those pages.

Remember that each page is generated dynamically. By reducing the number of paginated pages, you reduce the total number of pages, so fewer pages need to be generated dynamically.

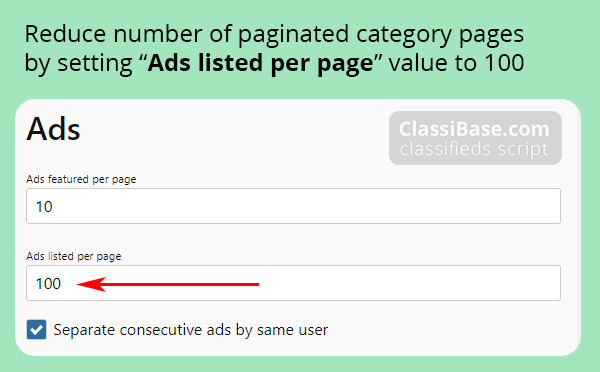

How to reduce the number of paginated pages? Let's assume that you have 400 ads in each category. By default, category pages list 20 ads per page, so you will have 20 paginated pages to show all ads in that category.

To reduce the number of paginated pages, increase the number of ads listed per page from 20 to 100. This results in 4 pages to show all ads in that category. This optimization reduces the number of paginated pages from 20 to 4, so 5 times fewer category pages need to be generated.

Reduce the number of paginated pages in category listings: more items per page means fewer pages.

You can set the number of ads shown per page from the “Settings” → “Ads” page in the admin panel. Set the “Ads listed per page” field to 100 and click the “Save” button at the bottom of the page to save settings.
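The page counts above come from a ceiling division. Here is a minimal Python sketch; the function name is illustrative only.

```python
import math


def paginated_pages(ads_in_category: int, ads_per_page: int) -> int:
    """Listing pages needed to show every ad in one category."""
    return math.ceil(ads_in_category / ads_per_page)


print(paginated_pages(400, 20))   # 20 pages at the default 20 ads per page
print(paginated_pages(400, 100))  # 4 pages at 100 ads per page
```

Summing this over all categories gives the number of listing pages crawlers have to fetch on every recrawl.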

Reduce image size variations of classified ads

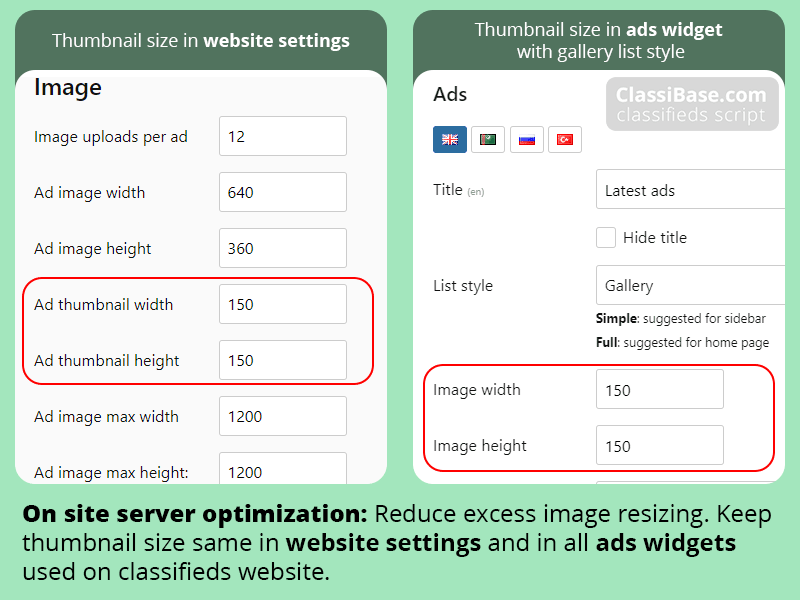

Image resizing is another heavy operation performed on the server side. Users add images to classified ads. When an image is displayed to a site visitor, it is resized, stored in a cache folder and then displayed. If the image was previously resized to the required size, it is displayed from the cache folder, skipping the heavy resize process. If you have many different image sizes defined on your classifieds website, each image is resized to each of those sizes as they are requested.

Use the same size for all thumbnails to optimize server performance. Fewer image sizes lead to fewer image resize operations.

Optimize your server's performance by reducing image resize operations: use the same size for all thumbnails. Thumbnail size values on classifieds websites are located in the following places.

- “Settings” → “Ads” page: the “Ad thumbnail width” and “Ad thumbnail height” settings.

- “Appearance” → “Widgets” page: all ad widgets placed in the various widget locations. If an ad widget uses the “Gallery” or “Carousel” list mode, its image width and height settings are visible.

How to reduce heavy image resize operations?

By eliminating unnecessary image size variations, you can reduce the number of image resize operations. We recommend using the same thumbnail size in the image settings and in all widgets used on your classified ads website.
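The following Python sketch models why fewer thumbnail sizes mean fewer resizes. It is a hypothetical illustration of resize-on-demand caching, not Classibase's actual code. A set of keys stands in for the cache folder, and the thumbnail dimensions are made-up values.

```python
class ThumbnailCache:
    """Hypothetical resize-on-demand cache; a set of keys stands in for the cache folder."""

    def __init__(self) -> None:
        self.cached: set[tuple[str, int, int]] = set()
        self.resize_operations = 0

    def get(self, image: str, width: int, height: int) -> tuple[str, int, int]:
        key = (image, width, height)
        if key not in self.cached:
            self.resize_operations += 1  # expensive resize happens only on a cache miss
            self.cached.add(key)
        return key


def count_resizes(images: list[str], sizes: list[tuple[int, int]], views: int = 3) -> int:
    """Resize operations after every image is shown `views` times in every size."""
    cache = ThumbnailCache()
    for _ in range(views):
        for image in images:
            for width, height in sizes:
                cache.get(image, width, height)
    return cache.resize_operations


images = [f"ad_{i}.jpg" for i in range(1000)]
print(count_resizes(images, [(200, 150), (120, 90), (300, 225)]))  # 3000
print(count_resizes(images, [(200, 150)]))                          # 1000
```

Repeat views cost nothing once a size is cached. Only the number of distinct sizes multiplies the resize work.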

Learn more about image settings.

Keep the database of a classifieds website clean and small

On classifieds websites, all ads have a lifetime. At the beginning, you can use a 1-year or 6-month period so ads stay on your website longer.

When a classifieds website grows, it eventually has lots of active ads that are too old. At this point, reduce the lifetime of your ads to 1-3 months to keep your website fresh and clean. Nobody wants to see a 12-month-old apartment rental or used mobile phone ad.

After classified ads expire, they still stay in the database for these reasons:

- The ad owner can extend and renew it. These are usually ads that offer services or sell products.

- If a user adds the same ad again as a new listing, the system can detect it and automatically renew the old expired ad instead. This reduces spam and repeated ads.

- Google still keeps expired ads in its index, and users coming from Google will see that the ad has expired. If related ads widgets are added, users visiting expired ads will see related ads below them.

You can adjust how long expired ads are stored in the database. To keep the database small and clean on big websites with more than 10k classified ads, we suggest keeping expired ads for no more than 1-2 months.

Set short periods for live and expired ads to keep the database clean. Optimize the database by deleting old listings automatically.

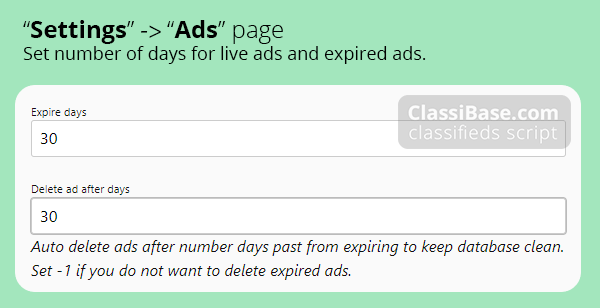

To set the lifetime of live ads and how long expired ads are kept:

- Navigate to “Settings” → “Ads” page in the admin panel.

- The “Expire days” field defines how many days an ad stays live on a classifieds website. Set it to 30 days.

- The “Delete ad after days” field defines how long expired ads remain on your web server and in the database. Set it to 30 days.

Remember: a smaller database means less data to search, which means a faster website.
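The two settings combine into an ad lifecycle. This Python sketch is a hypothetical model, not Classibase code. It assumes "Delete ad after days" is counted from the expiry date, so with 30 and 30 days no ad record should stay in the database longer than about 60 days.

```python
from datetime import date, timedelta


def ad_status(posted: date, today: date, expire_days: int = 30,
              delete_after_days: int = 30) -> str:
    """Hypothetical model of the "Expire days" / "Delete ad after days" settings.

    Assumes the deletion period starts when the ad expires.
    """
    expires_on = posted + timedelta(days=expire_days)
    deleted_on = expires_on + timedelta(days=delete_after_days)
    if today < expires_on:
        return "live"
    if today < deleted_on:
        return "expired"
    return "deleted"


today = date(2024, 3, 1)
print(ad_status(today - timedelta(days=10), today))  # live
print(ad_status(today - timedelta(days=45), today))  # expired
print(ad_status(today - timedelta(days=70), today))  # deleted
```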

How to keep a classifieds website database small?

You can keep the database small by shortening the lifetime of active classified ads. A 1-month period is a good lifetime for active ads on busy classifieds websites with around 10k ads.

How to keep a classifieds website database clean?

Keeping the database clean means removing unused and expired data from it. You can enable auto-deletion of expired ads on your classifieds website. Deleting expired classified ads after 1 month is the recommended period for keeping your database clean.

Check the resources and time used to generate a classifieds web page

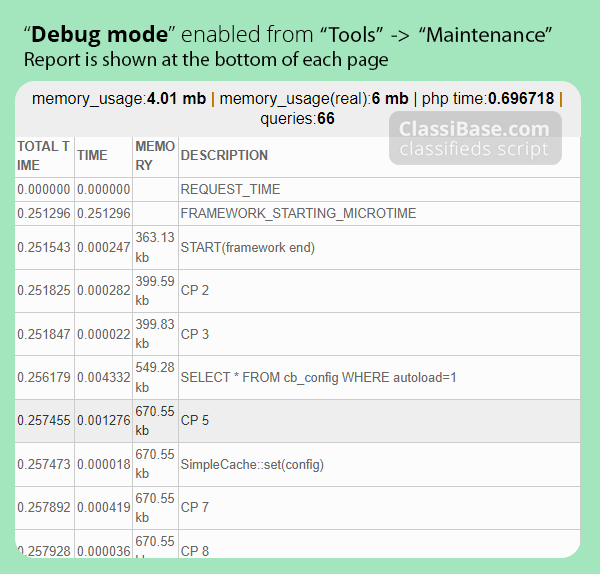

On classifieds websites, every page is generated dynamically. To view server-side processing time and understand how many resources are used, Classibase has a “Debug mode”. Debug mode shows database queries, time and memory usage at the bottom of the page.

To enable debug mode, navigate to the “Tools” → “Maintenance” page in the admin panel and click the “Disabled” button. Debug mode is visible only to classifieds website admins, so you can enable it on a live website without any worry.

After enabling “Debug mode”, you will see a one-line report for the current page at the bottom of every page. Click it to see a detailed report with database queries and the time taken by each query.

Generally, the Classibase classified ads script is optimized for fast page generation and uses fewer resources than WordPress websites. You can view a detailed comparison of resource usage of Classibase vs WordPress.

On Classibase, a page is generated in 200-700 milliseconds on average. Some pages, like search, may take longer. Time-consuming queries are usually cached, so subsequent page loads are faster.

Debug mode: view the queries and time taken to generate a classifieds website page. Analyze the queries to detect where you may need more optimization.

If your classified ads website starts to slow down for any reason, you can always enable “Debug mode” and analyze the reports to find slow queries. From there, you can decide to remove some widgets, add server-side caching, or add a reverse proxy to reduce server load. If none of these work, move to a web server with more resources.

After analyzing your website remember to disable “Debug mode” from the same tools page where you enabled it.

Server side optimization

Most web servers are optimized for general website usage. For a specific website, you need to further optimize the web server by tuning its configuration to match your site's needs. This includes caching public content, blocking bad bots, protecting the web server by limiting connections per IP or user agent, and limiting response time.

Most of these features are available in the Nginx web server. If you are not using Nginx or do not have permission to edit its configuration, read the Cloudflare section below to optimize your classifieds website's performance with an external service.

Leverage Browser cache

The simplest way to reduce bandwidth and make your website load fast is to use the browser cache. Define a long cache period in your Apache .htaccess or Nginx config files.

- A website without browser caching loads 500kb of data (images, JS, CSS, HTML) on each page, which takes 5-10 seconds.

- A website with browser caching loads only the 20kb HTML file on each subsequent page load, which takes 1-2 seconds.
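The numbers in the list above can be turned into a per-visit estimate. This Python sketch assumes, like the list, a 500kb first page and 20kb HTML-only later pages. On real classifieds pages, new ad images still have to be downloaded, so actual savings will be smaller.

```python
def transferred_kb(page_views: int, full_page_kb: int = 500, html_kb: int = 20,
                   browser_cache: bool = True) -> int:
    """Approximate data downloaded during one visitor's session."""
    if page_views <= 0:
        return 0
    if not browser_cache:
        return page_views * full_page_kb
    # The first page fills the browser cache; later pages reuse the cached
    # assets and download only the HTML document.
    return full_page_kb + (page_views - 1) * html_kb


print(transferred_kb(10, browser_cache=False))  # 5000
print(transferred_kb(10))                       # 680
```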

Apache .htaccess rules to use browser caching

You can use .htaccess rules to enable browser caching for static files. The .htaccess file is located in the root folder where your script is installed. Open it and append caching instructions to it.

Try something like this. It tells browsers to store these files for 1 year (31536000 seconds). You can change that value to store them for 1 month if you want. The Header directive requires Apache's mod_headers module:

<IfModule mod_headers.c>
<FilesMatch "\.(ico|pdf|jpg|jpeg|png|gif|txt|xsl|js|css|woff)$">
Header set Cache-Control "max-age=31536000"
</FilesMatch>
</IfModule>

Nginx config rules to use browser caching

Use these directives inside the server block.

This config also turns logging off for static files and prevents PHP from being executed for static files that are not found.

# serve static content only
location ~* \.(ico|pdf|jpg|jpeg|png|gif|txt|xsl|js|css|woff)(\?|$) {
    access_log off;
    log_not_found off;
    expires max;
    add_header Pragma public;
    add_header Cache-Control "public";
    add_header Vary "Accept-Encoding";
    try_files $uri =404;
}

You can add other extensions for static files as well.

Classifieds website optimization by adding Cloudflare

When your website gets too many page views, your shared hosting provider may warn you about excessive resource usage or block your website. They may offer to move you to a more expensive plan, usually a VPS or dedicated server. Before moving, you can resolve the problem by adding the free Cloudflare reverse proxy service.

What is Cloudflare?

Cloudflare is a service that sits between site visitors and your host, acting as a reverse proxy for websites. It protects your website from DDoS attacks, its built-in firewall blocks unwanted bad bots, and its static content caching reduces the number of requests to your website and saves bandwidth. In other words, Cloudflare reduces the load on your web hosting by 20-50% while protecting and speeding up content delivery to site visitors.

Cloudflare has a free tier that is sufficient for most websites. Most protection and optimization features are automated. I will explain how to configure the firewall to block bad bots, cache static content and compress static content for faster delivery. Cloudflare is an easy way to reduce server load.

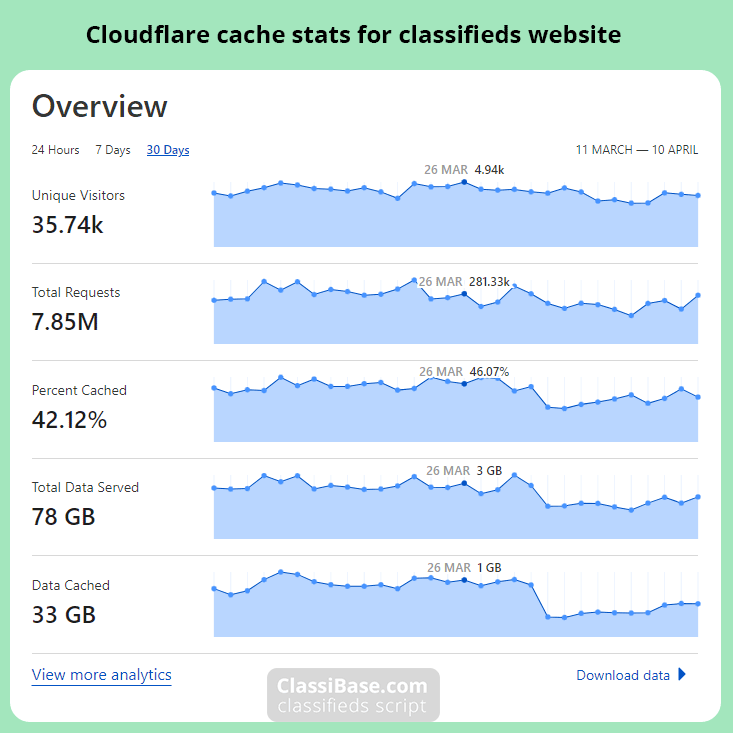

Case study: How we doubled our server performance and serve 8 million requests each month

We doubled our server performance simply by adding a free Cloudflare account. It protects our server from bot attacks and caches static content. Think of it as a buffer between your server and your users.

Cloudflare cache stats for a classified website. 42% served from cache. On average 8 million requests served per month.

Cloudflare stats for our classified ads website over the last 30 days. On average, 35k users generate 300k page views and 8 million requests each month on this website.

You can see that 42% of requests (3.2M) and of bandwidth (33 GB) are handled by Cloudflare without reaching our server. This means our origin server load is reduced by 42% with the free Cloudflare service. It also means you can keep using your current server for now without moving to a more expensive hosting tier.

Main benefits of Cloudflare for a classifieds website:

- Reduce the number of requests to your server by up to 50% with content caching

- Serve static content fast with CDN (content delivery network)

- Block bad bots detected across all websites that use Cloudflare

- Built-in automatic DDoS protection

- Speed up page load time by minifying CSS and JavaScript code

- Protect against automated email collection

- Save 40-60% of your main server's bandwidth

- Block or challenge requests by User agent, IP, ASN, Country

- Faster responses from the server closest to the site visitor

Server optimization for classifieds website using Nginx web server

If your hosting uses an Nginx web server and you have access to its config files, you can tweak it for high-load websites. Shared hosting does not allow editing the Nginx configuration, but you can edit it if you have a VPS or dedicated server. In any case, ask your hosting provider whether you can edit Nginx config files.

To prepare a classifieds website for high traffic, you need to add some baseline server configuration.

The following snippet reduces how long the server waits to receive data from clients and to send responses to them. I prefer putting it in the “http” context of the nginx.conf file. An explanation of each directive can be found in the Nginx documentation.

## Start: Timeouts ##
##Closing Slow Connections TO PREVENT DDOS "Slowloris"
client_body_timeout 5;
client_header_timeout 5;
send_timeout 5;
# kill if upstream is not responding
fastcgi_read_timeout 10;
fastcgi_send_timeout 5;
fastcgi_connect_timeout 5;
## End: Timeouts ##

Request limiting for search engine bots

Limiting the number of requests from search engine bots lets you serve more requests to real website users. We will limit search engine bots without blocking them.

There is an easy way to limit requests from popular search engine bots. The best part is that it doesn't require any changes to your server. Instead, you can limit search engine bots with robots.txt and the search engines' own settings pages.

Limit with Crawl-delay in robots.txt

You can set request limits for Yandex, Bing and Yahoo search engine bots using the Crawl-delay: 5 directive in your robots.txt file. This allows 1 request every 5 seconds, which reduces the number of requests from these search engine bots.
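Crawl-delay puts an upper bound on how many pages a compliant bot can fetch. Here is a small Python sketch of the arithmetic. It assumes the bot makes one request at a time per site and honors the delay exactly.

```python
SECONDS_PER_DAY = 24 * 3600


def max_requests(crawl_delay_seconds: float, period_seconds: int) -> int:
    """Upper bound on requests from a bot that honors Crawl-delay."""
    return int(period_seconds // crawl_delay_seconds)


print(max_requests(5, SECONDS_PER_DAY))       # 17280 per day
print(max_requests(5, 30 * SECONDS_PER_DAY))  # 518400 per 30-day month
```

A 5-second delay still allows about 518k requests in a 30-day month. That is roughly enough to recrawl the 500k indexed pages estimated earlier in this article, so the limit slows bots down without starving them.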

Limit requests for Google

Google crawlers do not obey the Crawl-delay directive in robots.txt. To limit the number of requests from Google bots, use the crawl rate setting for your domain on Google's settings page for webmasters. The rate defined there stays active for 90 days. After that, you have to set it again if you still want to limit Google bots.

Block known bad bots that harm your classifieds website's performance

Known bad bots can be identified by user agent string. We explained bad bots, their purpose and behavior previously in this article.

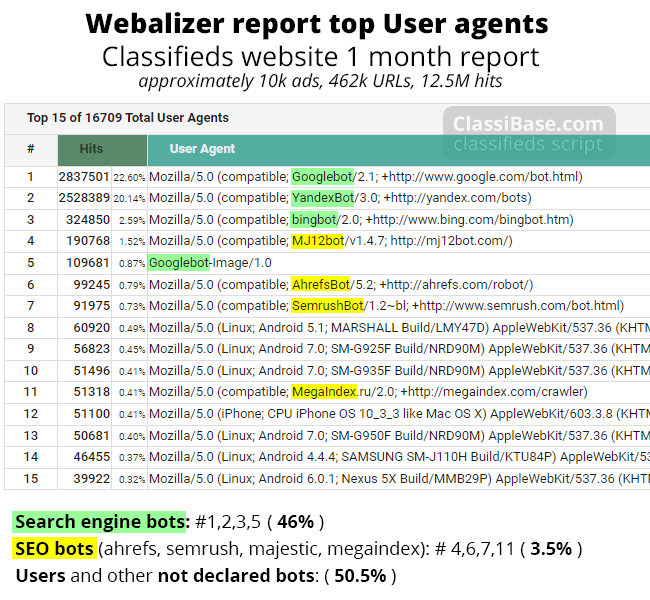

Webalizer report showing good and bad bots. Detect bad bots for better server optimization.

You can find the user agent strings of bad bots by analyzing your access log or viewing a Webalizer report. Bots are not visible in Google Analytics.

Why are bots not visible in Google Analytics?

Google Analytics is implemented as JavaScript code in your web pages. Most bots download your HTML files without executing the JavaScript in them. Because the JavaScript is not executed, Google Analytics does not record a page view and cannot show those bots in its reports.

We have a small list of bots that affect our website. We built this list by analyzing our Webalizer records periodically. Some of them are SEO bots that regularly crawled our classifieds website. We do not use any of those SEO services, so we prefer blocking all SEO bots, because they provide data to our competitors.

There are several ways to block unwanted bots by their user agent string. For example, let's say we want to block the SEO bots mj12bot, ahrefs, semrush and megaindex. They requested 432k dynamic HTML pages in one month, which uses our web server's processing resources and may slow down the classifieds website. Make sure the names match exactly as displayed in the Webalizer report, and pay attention to letter case as well.

Block bots with robots.txt

With a simple directive, you can block bots that obey the rules defined in robots.txt. Here is an example that blocks 4 bots. Keep in mind that some web bots, especially bad bots, do not obey robots.txt directives, so it is recommended to block them in other ways as well.

User-agent: mj12bot
Disallow: /

User-agent: ahrefs
Disallow: /

User-agent: semrush
Disallow: /

User-agent: megaindex
Disallow: /

Blocking bots with robots.txt is the easiest way to optimize your classifieds website, because it works on any web hosting regardless of which server software the host uses. Editing robots.txt also does not require any special permissions and works on any shared hosting plan.

Block bots in Nginx

Define the bots with a regular expression in the nginx.conf file inside the ‘http’ context. The ~* operator makes the match case-insensitive, so letter case in user agent strings does not matter here.

### case insensitive http user agent blocking with map detecting bot in one place and blocking per server ###
# https://stackoverflow.com/a/24820722
map $http_user_agent $limit_bots {
    default 0;
    ~*(mj12bot|ahrefs|semrush|megaindex) 1;
}

Here we define a new variable, $limit_bots. Nginx searches $http_user_agent for the listed bot strings. If one is found, $limit_bots is set to 1; otherwise, it is set to 0.

Then, inside the ‘server’ context of your virtual host, return a 403 response for these bots:

if ($limit_bots = 1) {
    return 403;
}

Here we use the $limit_bots variable defined earlier. If it equals 1, the request comes from one of the listed bots, and we return a 403 error code immediately without further processing.
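Before reloading Nginx, you can check which user agents the map pattern would catch. This Python sketch reproduces the same case-insensitive alternation with the re module. Python regular expressions and Nginx's PCRE behave the same for a simple pattern like this, but the sketch is only a testing aid, not part of the Nginx setup.

```python
import re

# Same alternation as the Nginx map; "~*" in Nginx means case-insensitive,
# which corresponds to re.IGNORECASE here.
LIMIT_BOTS = re.compile(r"(mj12bot|ahrefs|semrush|megaindex)", re.IGNORECASE)


def limit_bots(user_agent: str) -> int:
    """Return 1 if the user agent matches a listed bot, else 0 (like $limit_bots)."""
    return 1 if LIMIT_BOTS.search(user_agent) else 0


samples = [
    "Mozilla/5.0 (compatible; MJ12bot/v1.4.8; http://mj12bot.com/)",
    "Mozilla/5.0 (compatible; SemrushBot/7~bl; +http://www.semrush.com/bot.html)",
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
]
for ua in samples:
    print(limit_bots(ua), ua)  # first column: 1, 1, 0
```

Like the Nginx map, the search is unanchored, so the bot name can appear anywhere in the user agent string.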

Block bots in Apache

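# Start Bad Bot Prevention
<IfModule mod_setenvif.c>
    SetEnvIfNoCase User-Agent "mj12bot" bad_bot
    SetEnvIfNoCase User-Agent "ahrefs" bad_bot
    SetEnvIfNoCase User-Agent "semrush" bad_bot
    SetEnvIfNoCase User-Agent "megaindex" bad_bot
    <Limit GET POST PUT>
        Order Allow,Deny
        Allow from all
        Deny from env=bad_bot
    </Limit>
</IfModule>
# End Bad Bot Prevention

Example code for an .htaccess file, with its structure adapted from an external example. The patterns are not anchored with ^, because these bots' user agent strings usually begin with “Mozilla/5.0 (compatible; ...”, so an anchored pattern would not match them. Note that Order, Allow and Deny are Apache 2.2-style directives; on Apache 2.4 they require the mod_access_compat module.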

Block bots with Cloudflare

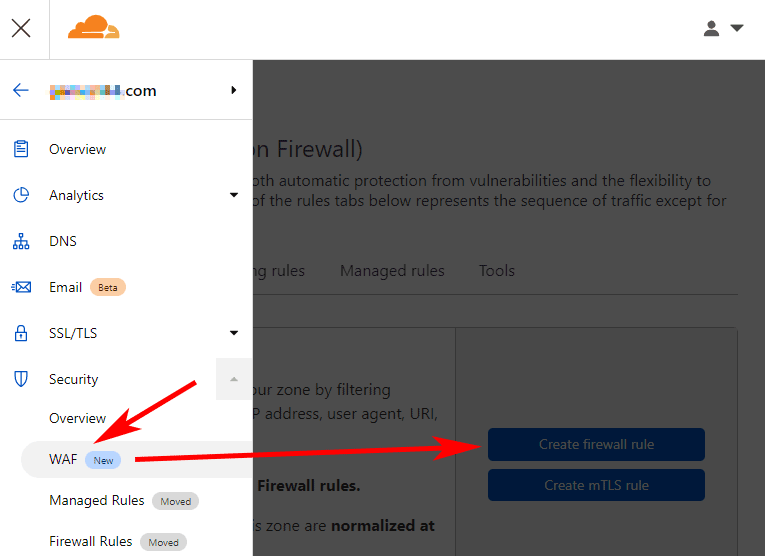

Cloudflare has a WAF (Web Application Firewall) where you can block or challenge requests by user agent. Blocking with the Cloudflare firewall improves your classifieds website's performance by stopping unwanted requests.

In the Cloudflare dashboard navigate to the “Security” → “WAF” page. Click the “Create Firewall Rule” button.

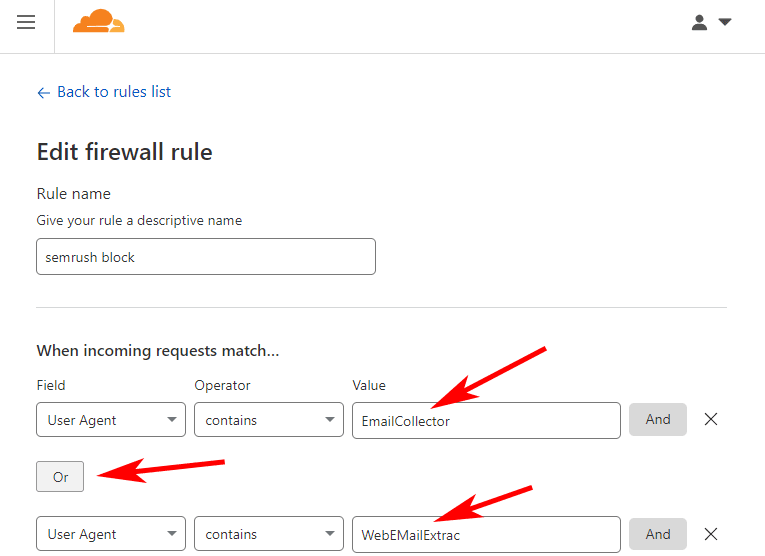

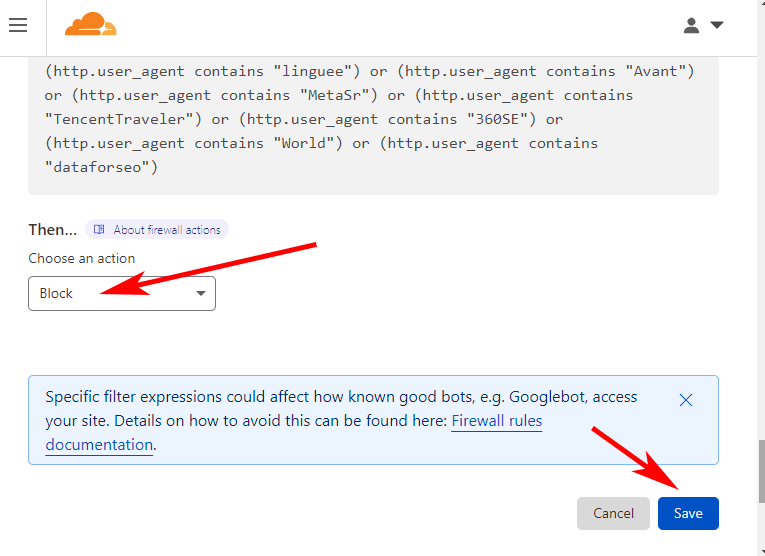

Give the rule a descriptive “Rule name”. Select the “User Agent” field and the “contains” operator, then type the bot name into the “Value” input (the match is case-sensitive). Then press the “Or” button and add the other bot user agents one by one. Here you can also see other “Field” types, including Country, IP, ASN, etc.

At the bottom, under “Choose an action”, select “Block”. You will also see an “Expression preview” listing all the conditions you defined above. Click the “Save” button to save and apply the firewall rule.

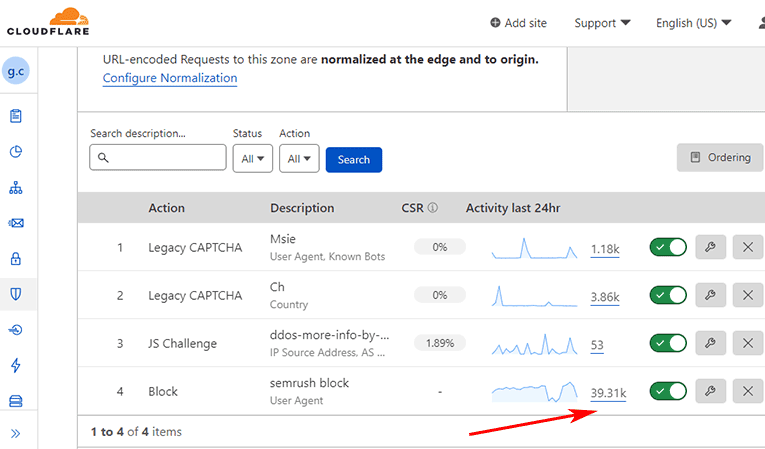

Cloudflare shows the blocked request count for each firewall rule. I love seeing how much Cloudflare blocks to reduce my server load.

On the WAF page, you will see the results of your firewall rules in real time: a graph and the number of requests matching your criteria that were detected and blocked in the last 24 hours. In our case, we blocked 39k requests from bad bots.

The Cloudflare firewall blocks requests on Cloudflare's servers before they reach your server. This makes it the best method to block unwanted traffic.

Block unknown bad bots

Now it is clear how to block known bots that identify themselves in the user agent string. There are also bots that hide their identity and pretend to be regular users of popular web browsers like various versions of Chrome, Firefox, Safari, etc. They can attack from different IP addresses, use different user agent strings and use different ASNs (Autonomous System Numbers), each containing a range of IPs.

How to detect unknown bad bots?

To detect unknown bad bots, regularly analyze your access logs for excess traffic from an IP or user agent that looks like a regular user but makes too many requests in a short time.

- Analyze the access logs and find requests with a regular browser “user agent” string that access your website at the same rate as search engine bots.

- Filter the access log by the suspicious user agent and list the top IPs. Take note of IPs that have more than 10x as many requests as others.

- Search Google to find the ASNs of the suspicious IPs. In most cases, all bad IPs belong to the same company and appear in a couple of ASNs.

- Block the bad bots by the detected ASN or IP.

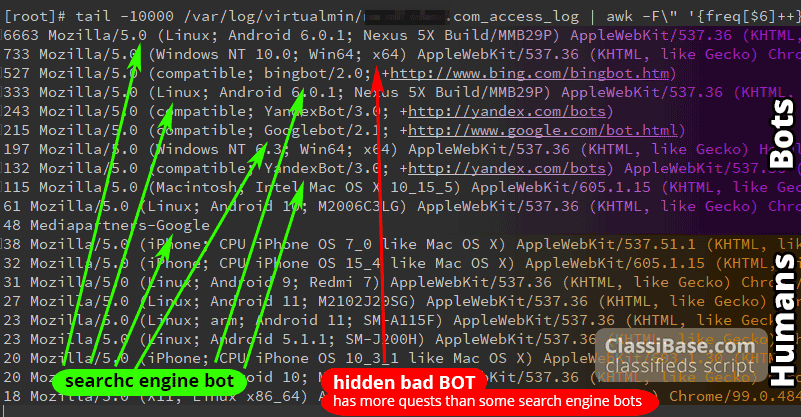

Here is an example of a shell command that analyzes the last 10k records of the access log and lists the 20 most frequent user agents.

tail -10000 /var/log/virtualmin/YOURDOMAIN.com_access_log | awk -F\" '{freq[$6]++} END {for (x in freq) {print freq[x], x}}' | sort -rn | head -20;

Hidden bad bot detection by reading access logs with a shell command. Server optimization for a classifieds website by detecting hidden bad bots.

A hidden bot using a regular user agent was detected as “Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36”. It accounts for 7% of the last 10k requests, while regular user agents start at about 0.3%, as seen in the image.
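If awk is not available, the user agent count can be reproduced in Python. This sketch assumes the combined log format used by the shell command above: splitting a line on double quotes makes index 5 the user agent, the same field as awk's $6. The log path in the commented usage is the placeholder from that command, and the sample lines are made up.

```python
from collections import Counter, deque


def read_last_lines(path: str, n: int = 10_000) -> list[str]:
    """Rough equivalent of tail -n N on a log file."""
    with open(path, encoding="utf-8", errors="replace") as log:
        return list(deque(log, maxlen=n))


def top_user_agents(lines: list[str], n: int = 20) -> list[tuple[str, int]]:
    """Most frequent user agents (field index 5 when splitting on double quotes)."""
    counts = Counter()
    for line in lines:
        fields = line.split('"')
        if len(fields) > 5:  # skip malformed lines
            counts[fields[5]] += 1
    return counts.most_common(n)


sample = [
    '192.0.2.1 - - [01/Nov/2021:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "UA-A"',
    '192.0.2.1 - - [01/Nov/2021:10:00:05 +0000] "GET /ad/1 HTTP/1.1" 200 512 "-" "UA-A"',
    '192.0.2.2 - - [01/Nov/2021:10:00:07 +0000] "GET / HTTP/1.1" 200 512 "-" "UA-B"',
]
for ua, hits in top_user_agents(sample):
    print(f"{hits / len(sample):.1%}  {hits}  {ua}")

# On a real server (placeholder path from the shell command above):
# lines = read_last_lines("/var/log/virtualmin/YOURDOMAIN.com_access_log")
# print(top_user_agents(lines))
```

Printing the share next to each count makes a user agent at 7% stand out against regular browsers at a fraction of a percent.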

We cannot block that user agent because we do not want to block real users that use the same user agent. So we need to dig further and find IPs related to that hidden bot.

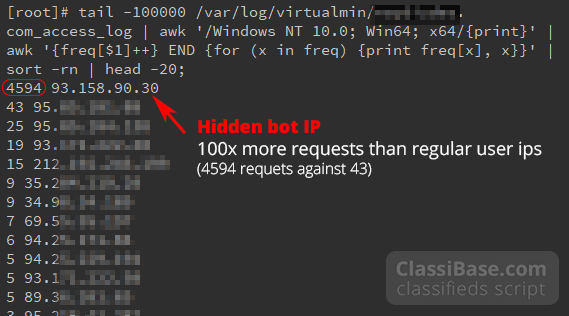

Use this code to find top IPs used by hidden bots. We will use the “Windows NT 10.0; Win64; x64” part of the user agent that is detected as a hidden bot.

tail -100000 /var/log/virtualmin/YOURDOMAIN.com_access_log | awk '/Windows NT 10.0; Win64; x64/{print}' | awk '{freq[$1]++} END {for (x in freq) {print freq[x], x}}' | sort -rn | head -20;

Hidden bot IP stands out with 100x more requests than regular users.

Now we have found the IP needed to block this bad bot.
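The step of picking out IPs with far more requests than others can also be automated. This Python sketch flags IPs whose request count is more than 10 times the median per-IP count, considering only lines that contain a given user agent fragment. The median baseline and the 10x factor are assumptions. The sample log lines use documentation IP ranges, not real traffic.

```python
from collections import Counter
from statistics import median


def suspicious_ips(lines, ua_fragment, factor=10):
    """IPs sending more than `factor` times the median per-IP request count.

    Only lines containing ua_fragment are considered (like the awk /.../ filter);
    the client IP is the first whitespace-separated field of each line.
    """
    counts = Counter(line.split()[0] for line in lines if ua_fragment in line)
    if not counts:
        return []
    typical = median(counts.values())
    return [(ip, hits) for ip, hits in counts.most_common() if hits > factor * typical]


CHROME_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
             "(KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36")


def fake_line(ip):
    """Hypothetical combined-format log line used as test data."""
    return f'{ip} - - [01/Nov/2021:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "{CHROME_UA}"'


# 20 regular visitors with 2 requests each, plus one IP with 200 requests.
sample = [fake_line(f"192.0.2.{i}") for i in range(1, 21) for _ in range(2)]
sample += [fake_line("198.51.100.7")] * 200

print(suspicious_ips(sample, "Windows NT 10.0; Win64; x64"))  # [('198.51.100.7', 200)]
```

The median is used instead of the mean so that a single very busy IP does not raise the baseline it is compared against.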

How to block hidden bots?

Hidden bots hide their identity with a regular user agent string. To block hidden bad bots we can apply these steps:

- Find the IP addresses of the hidden bot.

- Find the ASN those IP addresses belong to.

- Block the ASN with the Cloudflare firewall.

- Alternatively, if you found only 5-10 IPs and are not using Cloudflare, block them with the Apache or Nginx web server.

We can find the ASN of this IP by searching Google, for example for “ASN 93.158.90.30”, and then block that ASN number in Cloudflare. Blocking by ASN is preferred when several IPs are detected and they all belong to the same ASN or company. Blocking with Cloudflare is preferred because blocked requests never reach your server.

Request limiting by IP

We want to protect our classifieds website from unusual request patterns. To do this, we can make assumptions about how regular users request pages and use connections.

For example, a regular user spends at least 5-10 seconds on a page before navigating to another one, so they will not jump from page to page in less than 1 second.

Based on this assumption, we can block more than 10 page requests per second from the same IP. This is called request limiting. We will only limit dynamic page requests that use PHP to generate pages. Static content like images, stylesheets and JavaScript does not put much load on the server, because it does not need PHP processing.

Request limiting is based on an assumption, so test and tweak these numbers. If you get error responses while browsing your own classifieds website (Nginx returns 503 for rejected requests by default, which can be changed with limit_req_status), increase the limits.

Use this code for the Nginx web server to limit the number of requests. The code has 2 parts.

First part: declares a zone named “one” with a rate of 1 request per second. This code goes into the “http” context of the Nginx config file.

limit_req_zone $binary_remote_addr zone=one:10m rate=1r/s;

Second part: applies the zone named “one” with a burst of 10. With nodelay, up to 10 requests above the 1 r/s rate are served immediately, and further requests are rejected until the client's rate drops again. This code goes into a “location” context inside the “server” context. The location should be the one that handles PHP, similar to location ~ \.php$ {}.

limit_req zone=one burst=10 nodelay;

More info related to limit_req_zone and limit_req can be found in Nginx documentation.

We added code inside the PHP location because that is where dynamic content is generated. As explained above dynamic content uses server resources to generate web pages.
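To see what rate=1r/s with burst=10 nodelay does in practice, here is a simplified Python model of Nginx's limit_req accounting for a single client IP, based on its documented leaky-bucket behavior. It is an approximation, not Nginx's source. It ignores zone memory limits and the delay mode used without nodelay.

```python
def simulate_limit_req(arrivals, rate, burst):
    """Simplified model of 'limit_req ... burst=B nodelay' for one client IP.

    arrivals: sorted request times in seconds.
    rate: allowed requests per second (rate=1r/s -> 1).
    burst: the burst= value.
    Returns booleans: True = served, False = rejected
    (Nginx answers rejected requests with 503 unless limit_req_status is set).
    """
    results = []
    excess = None  # no state yet for this IP
    last = 0.0
    for t in arrivals:
        if excess is None:
            excess, last = 0.0, t  # first request creates the state and is served
            results.append(True)
            continue
        # Excess drains at `rate` per second; each new request adds 1.
        candidate = max(0.0, excess - rate * (t - last) + 1)
        if candidate > burst:
            results.append(False)  # rejected requests do not update the state
        else:
            excess, last = candidate, t
            results.append(True)
    return results


burst_at_once = simulate_limit_req([0.0] * 15, rate=1, burst=10)
print(burst_at_once.count(True), burst_at_once.count(False))  # 11 4
```

A client firing 15 requests at once gets 11 through (1 plus the burst of 10) and 4 rejected. After that, roughly one extra request per second is allowed.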

This throttles automated web page crawlers (bots, spiders, load testing tools) that send many requests from the same IP and may slow down your classifieds website. Unlike blocking by a static user agent, a specific ASN or a static IP, request limiting by IP does not require adding entries manually.

What is the difference between request limiting by IP vs blocking by IP?

- Request limiting by IP is dynamic and calculated by the server. The server tracks thousands of IPs and enforces their request limit. Requests from IPs that exceed the limit are rejected for a short time, and IPs that stay within the limit are not blocked at all.

- Blocking by IP is static: you need to add every IP you want to block manually. The server blocks only the IPs in that list, and it always blocks them.

Caching dynamic content

For a dynamic classifieds website, short-lived server-side caching can be used. Depending on your website, a 5-10 minute cache is enough to handle occasional high loads.

A classifieds website has many ads, and listings may be added or removed every minute. For this reason we can cache pages for a short time only, so new content is shown shortly after the old cache entry expires.

Caching also helps with repeated loads of popular pages like the homepage. For example, the homepage of a classifieds website may get 100 requests per minute. If we cache the dynamic homepage for 1 minute, that page is generated only once and stored in the cache; the other 99 requests are served from the cache without stressing the web server.
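The homepage arithmetic can be checked with a small Python model of a time-based microcache. It is a sketch under simplifying assumptions (one process, an injectable fake clock, no concurrency); Nginx's fastcgi_cache stores responses on disk instead. The class name MicroCache and the cache key string are hypothetical.

```python
import time
from typing import Callable


class MicroCache:
    """Time-based page cache: a generated page is reused for `ttl` seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self.store: dict[str, tuple[float, str]] = {}  # key -> (expires at, page body)
        self.generated = 0  # how many times the expensive page generation ran

    def get(self, key: str, render: Callable[[], str]) -> str:
        now = self.clock()
        entry = self.store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no PHP or database work
        self.generated += 1
        body = render()  # cache miss or expired entry: generate the page
        self.store[key] = (now + self.ttl, body)
        return body


# Simulate 100 homepage requests spread over one minute with a 1-minute cache.
fake_now = [0.0]
cache = MicroCache(ttl=60.0, clock=lambda: fake_now[0])
for i in range(100):
    fake_now[0] = i * 0.6  # one request every 0.6 s
    cache.get("GET example.com /", lambda: "<html>homepage</html>")
print(cache.generated)  # 1
```

In a real server many requests can miss at the same moment; fastcgi_cache_lock on lets only one of them reach PHP while the others wait for the cached copy.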

Another benefit of caching is protection against server load tests. A load test sends thousands of requests for a single web page to test server stability, and if it runs for a long time it can harm the server. With caching, the actual page is generated only once and the rest of the test is served from the cache.

Nginx has built-in caching features. For the Classibase classifieds script we do not want to cache pages for logged in users or admin pages, so we exclude them by checking cookies and page URLs.

Caching in Nginx is added in two parts.

First part: declare cache location, size and name “microcache” in “http” context.

fastcgi_cache_path /usr/share/Nginx/cache/fcgi levels=1:2 keys_zone=microcache:100m max_size=2g inactive=5d use_temp_path=off;
add_header X-Cache $upstream_cache_status;

Second part: goes into “server” → “location” with the PHP directives (for example location ~ \.php$ {}). Here we skip the cache for logged in users (when the cookie “cb_u” exists) and for URLs that contain “/admin/” or “/login/”.
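The two bypass rules described above can be checked outside Nginx with a small Python mirror of the same regular expressions. This is a hypothetical helper for testing your own URLs and cookies, not part of Classibase or Nginx; ~* in Nginx means a case-insensitive regex match.

```python
import re

# Same patterns as the Nginx "if" conditions; ~* is a case-insensitive regex.
LOGGED_IN_COOKIE = re.compile(r"cb_u", re.IGNORECASE)
PRIVATE_URI = re.compile(r"/(admin|login)/", re.IGNORECASE)


def no_cache(cookie_header: str, request_uri: str) -> bool:
    """Return True when the request would set $no_cache to "1"."""
    if LOGGED_IN_COOKIE.search(cookie_header):  # matches anywhere in the Cookie header
        return True
    if PRIVATE_URI.search(request_uri):  # needs a slash on both sides
        return True
    return False


print(no_cache("lng=en; cb_u=abc123", "/"))  # True: logged in user
print(no_cache("lng=en", "/category/cars/"))  # False: cacheable
```

Because the cookie pattern is a substring match on the whole Cookie header, any cookie whose name or value contains cb_u also bypasses the cache, which errs on the safe side. The URI pattern needs a trailing slash, so a request for /admin without one is not matched.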

### FastCGI cache START
# Setup var defaults
set $no_cache "";
# Bypass cache for logged in users
if ($http_cookie ~* "cb_u") {
    set $no_cache "1";
    add_header X-Microcachable-u "0";
}
# Bypass admin and login pages
if ($request_uri ~* "/(admin|login)/") {
    set $no_cache "1";
    add_header X-Microcachable-l "0";
}
# Bypass cache if flag is set
fastcgi_no_cache $no_cache;
fastcgi_cache_bypass $no_cache;
fastcgi_cache microcache;
fastcgi_cache_valid 404 30m;
fastcgi_cache_valid 301 302 10m;
fastcgi_cache_valid 200 5m;
fastcgi_cache_valid any 1m;
fastcgi_cache_min_uses 1;
fastcgi_max_temp_file_size 1M;
fastcgi_cache_lock on;
# Use language cookie in cache key to show different locale on homepage.
# $c_uri is not a built-in Nginx variable: it must be defined elsewhere in
# your config (for example with map), otherwise use $request_uri instead.
fastcgi_cache_key "$request_method$host$c_uri$cookie_lng$cookie_cb_u";
fastcgi_cache_use_stale updating error timeout invalid_header http_500 http_503 http_403 http_404;
fastcgi_cache_background_update on;
fastcgi_pass_header Set-Cookie;
fastcgi_pass_header Cookie;
fastcgi_ignore_headers Cache-Control Expires Set-Cookie;
### FastCGI cache END

More about caching in Nginx can be found in the official documentation.
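The fastcgi_cache_key, fastcgi_cache_valid and levels=1:2 settings in the config above can be modelled in a few lines of Python to see which pages share a cache entry and how long each response is kept. It is a sketch: the URI is passed as a plain string because $c_uri is defined outside this config, and example.com is a placeholder host. Nginx names each cache file after the MD5 of the key, and with levels=1:2 it uses the last hex character and the two before it as subdirectories.

```python
import hashlib

# fastcgi_cache_valid from the config: status -> seconds.
CACHE_VALID = {404: 30 * 60, 301: 10 * 60, 302: 10 * 60, 200: 5 * 60}
CACHE_VALID_ANY = 60  # "fastcgi_cache_valid any 1m" covers all other statuses


def ttl_for(status: int) -> int:
    """Seconds a response with this status stays in the microcache."""
    return CACHE_VALID.get(status, CACHE_VALID_ANY)


def cache_key(method: str, host: str, uri: str,
              cookie_lng: str = "", cookie_cb_u: str = "") -> str:
    """Plain concatenation, like the Nginx key; a missing cookie becomes ""."""
    return f"{method}{host}{uri}{cookie_lng}{cookie_cb_u}"


def cache_file(cache_dir: str, key: str) -> str:
    """File path for levels=1:2: last hex char, then the two chars before it."""
    digest = hashlib.md5(key.encode()).hexdigest()
    return f"{cache_dir}/{digest[-1]}/{digest[-3:-1]}/{digest}"


key_en = cache_key("GET", "example.com", "/", cookie_lng="en")
key_de = cache_key("GET", "example.com", "/", cookie_lng="de")
print(key_en, key_de)  # GETexample.com/en GETexample.com/de
print(cache_file("/usr/share/Nginx/cache/fcgi", key_en))
```

Including the language cookie gives each locale its own cached homepage. Anonymous visitors have no cb_u cookie, so that part of the key is empty for them, and logged in users never reach the cache because of the bypass rule.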

FAQs

Why does my website slow down?

Too much traffic will slow down your website. Classifieds websites tend to have thousands of listings, resulting in close to a million different pages. More pages lead to more requests. That is the main reason classifieds websites slow down over time.

Why does your server use more resources as it grows?

A growing website means more ads, and more ads mean more pages. Usually 10k ads can lead to about 1 million different web pages, which means 1 million different pieces of information for humans and robots. Having that many dynamically generated pages crawled every month by at least 10 different web bots uses a lot of processing resources on your web server.
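A rough estimate shows what this means for the server. The Python sketch below assumes that each of the 10 bots requests every page once a month and that a month has 30 days; real crawlers revisit some pages more often and others less.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumption: a 30-day month


def monthly_bot_requests(pages: int, bots: int, crawls_per_month: int = 1) -> int:
    """Requests if every bot fetches every page crawls_per_month times."""
    return pages * bots * crawls_per_month


def average_requests_per_second(requests_per_month: int) -> float:
    return requests_per_month / SECONDS_PER_MONTH


total = monthly_bot_requests(pages=1_000_000, bots=10)
print(total, round(average_requests_per_second(total), 2))  # 10000000 3.86
```

About 10 million requests a month is close to 4 dynamic pages per second around the clock, each needing PHP and database work, before a single human visitor arrives. Peaks are higher than this average because crawlers do not spread their requests evenly.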

How to make your classifieds website faster?

When your website starts to slow down, try to reduce the number of widgets and keep the database clean by shortening how long live and expired ads are kept. On the server side, add the Cloudflare reverse proxy; it can reduce the number of requests to your server by 20-50%. If your web server is still slow after trying all these options, move to a hosting package with more resources.

When should I expect a slowdown on classifieds websites?

Usually with Classibase you will not experience any slowdown up to 10k ads, even on shared web hosting. When you reach 50-100k ads, consider moving to a dedicated web server to handle the higher load. In any case, as a quick fix, try a free Cloudflare account to reduce the load on your server and protect against DDoS attacks. Additionally, block all bad bots, because your website should serve users, not bots.

Should I worry about server slowdown if my website has less than 1k ads?

No, absolutely not. A website with 1k classifieds ads is very small, and any shared hosting can handle it without any problem.

How to improve server speed?

The easiest free way to improve server speed is to add a reverse proxy and CDN service like Cloudflare.

What is the difference between page load speed and page generation speed?

Page load speed is the time between sending a request to the server and the page being fully loaded on the user's screen. It includes loading the HTML document together with all its images, CSS and JavaScript files. Page generation speed is the time taken to generate a single dynamic HTML page on the server side. In this article we tune the server to improve page generation speed.

Final thought on server optimization for classifieds website

We learned that classifieds websites grow over time, so we should keep their databases tidy and clean. Always monitor for automated requests by bots that may slow down classifieds websites for humans. Consider adding the free Cloudflare reverse proxy and CDN service for better protection and speed, and to reduce requests to your origin server. Server optimization for a classifieds website means reducing unwanted requests to the main server.

Advanced users familiar with their web server should implement server-side caching and request limiting. Also, do not ignore on-site optimization, such as setting reasonable lifetimes for live and expired ads.